When Sound Begins With Description: A Different Way to Approach Music Creation

By PAGE Editor

Many creative ideas don’t arrive as melodies. They arrive as descriptions: a sentence about a mood, a rough scene, or a feeling you want someone else to experience. For a long time, that kind of input wasn’t considered “real” music material—you had to translate it into notes before anything happened. A workflow centered on Text to Music AI challenges that assumption by treating language itself as a valid starting point, not just a placeholder.

What stood out to me while testing this approach was how naturally it aligned with how people actually think. Instead of forcing early technical decisions, the process lets you describe intent first and hear a response almost immediately. That shift alone changes how ideas develop, because you’re reacting to sound instead of imagining outcomes in your head.

The Real Problem Isn’t Creation — It’s Commitment Too Early

In traditional production, the very first steps already carry weight:

choosing a tempo

picking instruments

setting up a session structure

deciding whether something is a “song” or just a loop

These choices matter, but they also lock you into a direction before you’ve tested whether the emotional idea works. That is one reason so many projects stall: you invest time before you gain clarity.

A language-first workflow lowers that cost. You can explore direction without deciding everything upfront.

Reframing the Process: Exploration Before Precision

Instead of a linear build, ToMusic.ai encourages a more exploratory loop:

Describe the idea in words

Listen to how it manifests as sound

Adjust the description

Repeat

This loop feels closer to sketching than engineering. You’re not polishing details yet—you’re checking whether the core idea deserves that effort.

In practice, this means you spend more time evaluating and less time assembling.

What Actually Changes When You Work This Way

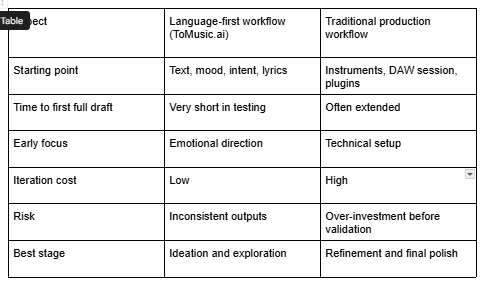

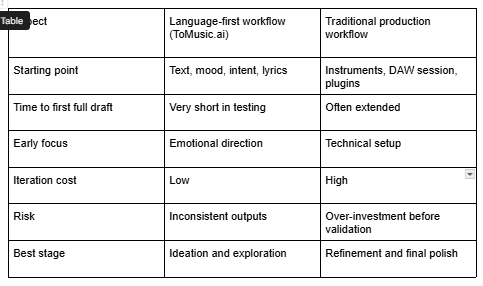

When I compared this workflow to traditional production, a few differences became clear.

1. You think like a listener sooner

Because you hear results quickly, your questions shift:

Does this feel right?

Is the mood consistent?

Does the energy evolve naturally?

Those are listener questions, not technical ones—and they’re often more important early on.

2. Iteration becomes lighter

When generating drafts is fast, you’re less attached to any single version. That reduces the tendency to defend weak ideas just because you spent time on them.

3. Language becomes a creative control

Subtle changes in wording—adding a sense of movement, contrast, or emotional arc—often produced noticeably different results in my tests. The input isn’t just descriptive; it’s directive.

Where an AI Music Generator Fits Into This Picture

At a certain point, you stop exploring and start selecting. That’s where an AI Music Generator becomes particularly useful: it turns abstract direction into concrete options you can compare side by side.

Rather than generating a single “answer,” the system proposes interpretations. Each output feels like a suggestion of how your idea could exist musically. Some will miss the mark. Others will surprise you by capturing something you hadn’t articulated clearly.

That moment of recognition—“yes, that’s closer”—is where clarity forms.

A Practical Comparison of Creative Approaches

Neither traditional production nor a language-first workflow replaces the other. They solve different problems at different stages. The value of the language-first approach lies in reaching clarity about an idea faster.

Observations From Repeated Use

After I generated many variations across different prompts, several patterns emerged.

Clear emotional movement matters more than genre labels

Descriptions like “slow tension opening into relief” produced more coherent results than stacking multiple style tags.

Lyrics expose weaknesses immediately

Hearing lyrics sung—even imperfectly—reveals awkward phrasing faster than reading them. This alone can save time during rewriting.

Over-specifying can reduce coherence

When prompts became overly detailed, results sometimes felt fragmented. Simpler intent with a clear arc often worked better.

Consistency requires structure

If you want multiple tracks to feel related, using a repeatable prompt framework is essential. Otherwise, outputs can feel stylistically scattered.
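One way to picture a repeatable prompt framework is as a fixed template where only one element changes from track to track. The sketch below is a minimal Python illustration under that assumption: it only builds plain-text prompts to paste into a generator and does not call ToMusic.ai or any other service. The template wording and the field names (style, arc, sections, vocals) are hypothetical choices, not a documented prompt format.

```python
from dataclasses import dataclass

# Hypothetical template: these fields are illustrative choices,
# not parameters defined by ToMusic.ai or any other generator.
TEMPLATE = (
    "{style}. Emotional arc: {arc}. "
    "Structure: {sections}. Vocals: {vocals}."
)


@dataclass(frozen=True)
class SeriesStyle:
    """Settings held constant across every track in a related set."""
    style: str
    sections: str
    vocals: str


def build_prompt(series: SeriesStyle, arc: str) -> str:
    """Fill the fixed template, varying only the emotional arc."""
    return TEMPLATE.format(
        style=series.style,
        arc=arc,
        sections=series.sections,
        vocals=series.vocals,
    )


series = SeriesStyle(
    style="Warm acoustic pop with soft piano",
    sections="intimate verses, wider chorus",
    vocals="gentle lead vocal",
)

arcs = [
    "slow tension opening into relief",
    "restless energy settling into calm",
]

# Print one prompt per track; only the arc differs between them.
for arc in arcs:
    print(build_prompt(series, arc))
```

Because only the arc changes, the style, section guidance, and vocal direction stay identical across the set, which is the kind of consistency a framework like this is meant to protect.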

Limitations That Are Worth Acknowledging

This workflow isn’t effortless or flawless, and pretending otherwise reduces trust.

Results vary between generations

It’s normal to need several attempts to reach something usable.

Quality depends on input clarity

Vague prompts lead to generic outputs. The system reflects what you give it.

Vocals can feel uneven in some cases

Dense or rhythmically complex lyrics sometimes need adjustment for natural delivery.

These constraints don’t negate the value. They define how the tool should be used: as a collaborator, not a shortcut to perfection.

Prompt Strategies That Felt More Predictable

Describe progression, not just mood

Instead of “sad and atmospheric,” try describing how the emotion changes over time.

Guide sections without micromanaging sound

Indicating “intimate verses, wider chorus” often worked better than listing instruments.

Treat outputs as drafts

The strongest results usually came after refining the prompt based on what the first version revealed.

Who This Approach Benefits Most

From what I’ve seen, this way of working suits these groups especially well:

writers who think in language first

creators producing music for video or digital media

teams needing fast directional options

producers looking for fresh starting points outside their habits

It’s less about replacing skills and more about shortening the path to insight.

A Different Takeaway

The real value of ToMusic.ai isn’t automation—it’s acceleration of understanding. When an idea becomes audible early, you can judge it honestly. And honest judgment is what leads to better creative decisions.

When description turns into sound quickly, the creative process stops feeling like a barrier. It becomes a dialogue between intention and result, where clarity arrives sooner and effort is spent where it matters most.